Can I have a .NET package manager?

April 18, 2010 at 08:28 PM | categories: NPackage | View CommentsInsipired by source code packaging systems like Haskell Cabal, I'd like a standard tool for publishing shared code. I've seen teams take a variety of sub-optimal approaches, including: building everything from source each time; building binaries once then referencing them from a network share; and building binaries then checking them into source control.

As a developer, what I'd like to be able to do is to declare the external libraries that my code depends on. I'd like it to work the same way for third-party code (say, NUnit and log4net) as for my own code (say, a library that gets re-used across several apps on the same team). There should be a standard format for publishing libraries, but there should be minimal effort involved in pulling one of these libraries into my code.

What I propose is:

- One or more central package sites. One could be a public web site that maintains a list of open-source libraries; teams could host their own for internal libraries. These internal sites could just be directories in the file system or in source control. Package sites just contain enough information to find the right binaries or source code -- the binaries and sources themselves don't have to live at the same site.

- A command line app that updates packages to the current version, either by downloading binaries or by downloading and building source code. This would run on individual developers' PCs and on a continuous integration server.

- A tool for keeping Visual Studio project references up to date. I spend far too much time in the Project References dialog -- or editing .csproj files by hand -- to fix broken reference paths and libraries accidentally referenced from a developer's GAC.

I don't know of anything that solves the problem as cleanly as in other languages. Am I missing something?

Movable Type on EC2

April 18, 2010 at 08:00 PM | categories: Uncategorized | View CommentsI'm a big fan of virtual servers and I've always run this web site from one. Until recently I had it on a VMware instance on my home PC, although my recent experience with Amazon EC2 and a couple of large traffic spikes prompted me to move it.

In the end the process turned out to be pretty easy:

- Back up to Amazon S3 using duplicity:

- MySQL dump:

mysqldump --all-databases - /etc/apache2

- /var/www

- Start an EC2 small instance running AMI Ubuntu 9.04 (ami-ccf615a5)

- Restore from Amazon S3

apt-get -y install apache2 duplicity libapache2-mod-perl2 libdbd-mysql-perl libdbi-perl mysql-server perlmagick python-boto- Restore MySQL dump, /etc/apache2 and /var/www using duplicity

- Run MySQL script against the local instance

- Start Apache. Check whether the static HTML pages and Movable Type's admin interface work.

- Assign an Amazon elastic IP address to the EC2 instance. This gives me a static IP address that I can refer to from DNS.

- Remap the DNS alias (an A record and a CNAME record) via my ISP's web site

- Done!

I'm happy with the changes so far:

- Performance has been fine: although publishing the site now takes 30 seconds not 15, I'm getting much better response times and bandwidth

- I'm paying to run an EC2 instance full time whereas before I was just paying for home power bills

- I'm not going to get shot by my ISP next time one of my posts appears on Reddit

The fact that I was taking daily backups made the move risk-free. It took a couple of attempts to get a working site on the EC2 server, but I was able to start a fresh instance and restore from backup each time. I also know that, if the site does fall over in future, restoring from backup will take a few minutes and I'll lose one day of data at most.

Cherry Blossom in Jubilee Place

April 12, 2010 at 09:07 AM | categories: Uncategorized | View Comments

Jubilee Place Cherry Blossom - 2

Originally uploaded by Tim Robinson

Summer has almost arrived in London -- I took this at the weekend in one of the parks close to where I work.

A render farm in Haskell

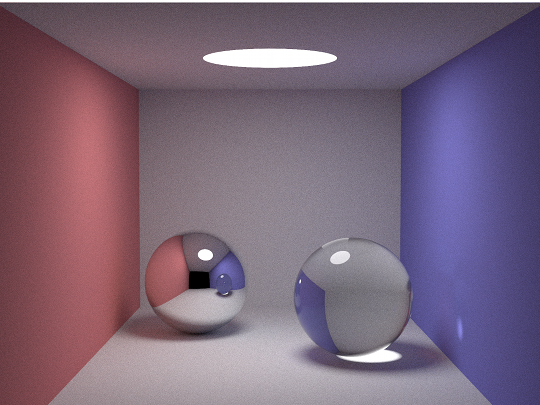

March 07, 2010 at 07:40 PM | categories: Uncategorized | View CommentsBrowse the full source of the ray tracer on GitHub

A few weeks back I came across the smallpt ray tracer by Kevin Beason. smallpt is a global illumination renderer written in 99 lines of C++. Since it uses a Monte Carlo technique to randomly cast rays and average the results, the longer you can leave it running, the better the image will look. Kevin's code uses OpenMP to split calculations across more than one CPU core.

Since ray tracing is the sort of mathsy task that Haskell excels at, I wanted to see how what a Haskell port of Kevin's code looked like. That code itself isn't the subject of this article, because what I wanted to write about instead are the techniques you can use to distribute Haskell code across multiple cores. These techniques range from parMap (which is almost transparent to the program, but provides little control over where the code runs) to brute-force distribution of work across several machines.

parMap

One of the things I love about Haskell is that its pure functional nature makes it straightforward to parallelise code within a single machine. You can normally take any code implemented in terms of map and parallelise it with parMap, from the Control.Parallel.Strategies module. parMap will use lightweight threads provided by the runtime to apply a function to each element in a list in parallel.

The parMap function accepts an argument that controls the evaluation strategy. Most of the examples I've seen use parMap rwhnf ("weak head normal form"), which only evaluates the outside of a data structure; the insides of your data are evaluated lazily, and this doesn't necessarily happen on one of the parallel lightweight threads as intended.

To evaluate anything more complicated than a list of simple values in you'll probably need to use parMap rdeepseq, which recurses inside your data on the lightweight thread so that it's fully evaluated by the time parMap finishes. rdeepseq is defined in terms of the Control.DeepSeq module, and if you've defined your own types, you'll find you need to implement NFData.

A final note on parMap: don't forget to compile with the -threaded flag, then run your program with +RTS -Nx, where x is the number of CPU cores you want to distribute across.

forkIO

Haskell supports good old-fashioned manual concurrency with functions in the Control.Concurrent module. This works broadly the same as in other languages:

- Use

forkIOto start an IO computation in parallel with the current thread - Threads can communicate through objects called MVars: create a new one using

newEmptyMVarornewMVar - MVars can be empty, or they can hold at most one value:

- Read a value from an MVar using

takeMVar. This will block if the MVar is currently empty. - Write a value to an MVar using

putMVar. This will block if the MVar is currently full. CallingputMVarwakes up one of the threads that's blocked insidetakeMVar.

forkIO starts a computation in parallel and lets you essentially forget about it; mvars are an effective way of encapsulating mutable state shared between more than one thread. What I like about this is that there's no error-prone manual locking like you'd find in, say, Java or .NET. Although forkIO and mvars give you full control over thread scheduling, they're not drop-in replacements for sequential calculations in the same way as parMap.

Brute force

The previous two techniques rely on the multi-threading capabilities of the Haskell runtime. They know nothing of what's happening outside of a single Haskell process, which limits them to the number of cores present in the machine. To go beyond that, separate instances of your program will need to communicate with each other over a network.

In my Haskell port of smallpt, I used this technique to run as many instances of the program as needed: one process per core on the local machine, and one process on each of a range of instances running on Amazon EC2. The communication protocol is as simple as possible: plain text over pipes. I used ssh to communicate with the EC2 instances, so from the outside there's no difference between a local instance and one running on the cloud.

The algorithm goes as follows:

- Establish the work you need to do. In this case, a work item is one line in the final rendered image.

- Split the work into tasks you can give to the worker processes. For simplicity, I'm splitting the work equally to give one task to each worker.

- Launch the workers and provide them with the task inputs. Since workers accept plain text, I take a

Workobject andhPrintit to a pipe. - When each worker finishes, collect its output. Outputs will come back in an unpredictable order.

- When all the workers are done, sort all of the outputs into the same order as the inputs

The coordinator is the program you launch from the command line, and it deals with things like command-line parsing, executing the distribution algorithm above, and writing a .png format image at the end. The worker processes are themselves instances of the smallpt executable, launched with the -r flag. In effect what each smallpt -r does is:

-- Render one line of the scene and return it as a list of pixels

line :: (Floating a, Ord a, Random a) => Context a -> Int -> [Vec a]

runWorker = interact (show . map line . read)

One potential flaw in this is that, because you're communicating over plain text, the Haskell type system won't trap any errors: there isn't necessarily any link between the types in the coordinator and the types in the worker. The interface to the distribution code consists of a Coordinator a b record, where a is the type for inputs to the workers, and b is the type that the workers produce as output:

data (Show a, Read b) => Coordinator a b =

Coordinator { submitWork :: [String] -> [a] -> IO [b],

runWorker :: IO () }

coordinator :: (Read a, Show a, Read b, Show b) => (a -> b) -> Coordinator a b

coordinator worker =

Coordinator { submitWork = submitWork',

runWorker = interact (show . map worker . read) }

Most of the multi-threading code lives inside the submitWork' function which implements the algorithm above:

- Given a list of worker commands lines (

[String]) and the work itself ([a]), produce a list of tuples of workers and tasks - Using

forkIO, fork one thread per worker. Inside each of these threads: - Start the worker process itself (via

createProcess shell) - Write an

[a]to its standard input - Read a

[b]from its standard output - Put the

[b]into an mvar shared between all worker threads - Back in the coordinator thread, call

takeMVaronce for each worker. This produces a list of results as a[[b]]. - Once all the workers have finished, collapse the results into a

[b], making sure the output list comes back in the same order as the original inputs. If any of the workers encountered an error, useioErrorto fail the whole operation.

This approach works remarkably well for this simple ray tracer demo. But it has some serious flaws that you'd want to avoid in a production system:

- Any failed worker process invalidates the whole set of results. Even in the absence of software bugs, machines can fail any time (consider how often disks fail when you've got 4,000 of them). The coordinator should recognise transient errors and retry those tasks; it might choose to take workers out of rotation if they fail repeatedly.

- All machines are assumed to be equally powerful. This is rarely the case: for instance, workers running on my Mac here finish far sooner than ones on my small Amazon instances.

- All work is assumed to take the same length of time to complete. Even in this simple ray tracer, plain diffuse surfaces are much easier to calculate than reflective (shiny) ones or refractive (transparent) ones.

- Tasks are allocated up front. If a worker finishes early -- maybe because it's running on a Mac Pro, or because it generated a line of empty space -- it can't have any more work allocated to it.

- Workers can't spawn other workers. In my code the worker function isn't declared in the IO monad, so it can't interact with anything. Even if it could, it would need to know about all of the other workers so that it could pick an idle core to do work on.

Next time I'll talk about some of the code in the ray tracer itself, as well as some approaches you'd use in practice to correct these flaws in the demo.

An improved spelling corrector in Haskell

November 01, 2009 at 11:09 AM | categories: Uncategorized | View CommentsMy previous post came up on the Haskell Reddit yesterday, and I got some great suggestions for improvements to the program, which I've checked into GitHub.

-

Map.fromListWithreplacesList.foldl'andMap.insertWith'.fromListWithappears to be specifically designed for aggregating values that share a common key (in .NET terminology it's theGroupByfunction). -

I'll commonly use a construct like

readFile "big.txt" >>= return . train . lowerWords, to bind a monad valuereadFile "big.txt"to a function(train . lowerWords), then produce a new monad value withreturn. A shorter way to write this isfmap (train . lowerWords) (readFile "big.txt"), or, with an import from Control.Applicative,(train . lowerWords <$> readFile "big.txt"). -

You can replace the lambda syntax

(\w -> Map.lookup w nwords)with an operator section,('Map.lookup' nwords). You might see this more commonly as(+1)in place of(\x -> x + 1); it's the same thing. Edit: the challenging Markdown syntax means that you'll have to imagine the single quotes aroundMap.lookupreplaced with backticks, `.

I should have realised this at the time, but it didn't occur to me that you can use full pattern-matching syntax in the right-hand side of a Haskell list comprehension. If the match fails on some element then that element is filtered out. We can use this to improve on the Python version of the edits1 function: whereas the Python version combines its b[0] subscripts with an if b check, the Haskell version can use pattern matching to do both. We can also use the inits and tails functions to build substrings instead of brute-force applications of take and drop.

# Python def edits1(word): s = [(word[:i], word[i:]) for i in range(len(word) + 1)] deletes = [a + b[1:] for a, b in s if b] transposes = [a + b[1] + b[0] + b[2:] for a, b in s if len(b)>1] replaces = [a + c + b[1:] for a, b in s for c in alphabet if b] inserts = [a + c + b for a, b in s for c in alphabet] return set(deletes + transposes + replaces + inserts) > -- Haskell > edits1 word = let s = zip (inits word) (tails word) > deletes = [ a ++ y | (a, _:y ) <- s ] > transposes = [ a ++ y:x:z | (a, x:y:z) <- s ] > replaces = [ a ++ c:y | (a, _:y ) <- s, c <- alphabet ] > inserts = [ a ++ c:x | (a, x ) <- s, c <- alphabet ] > in Set.fromList $ concat [ deletes, transposes, replaces, inserts ]

The edits1 function is the densest one in both the Python and Haskell versions. It's the one where the intent of the code was least clear to me when I originally saw it, probably because of the extra clutter in the list comprehensions. In the latest Haskell revision it's more obvious what's going on.

I've consistently used the names x, y and z for the first three parts of the word's suffix. Because they consistently stand for the same thing, any inconsistencies jump out at you when you read the code:

a ++ ystands out becausexis missing; it's been deleted. (xisn't even mentioned in the pattern match.)a ++ y:x:zstands out becausexandyare the wrong way round; we're transposing thema ++ c:ystands out because we've gotcinstead ofx;xhas been replaced. (Again,xhas dropped out of the pattern match.)a ++ c:xhascin front ofx; it's been inserted

After I'd written the first Haskell version I was skeptical. The Python version uses some typical Python idioms, like if b for checking for empty strings, using the same syntax for accessing lists and sets, and overloading the or operator to look for the first non-empty set, and Haskell doesn't have these same shortcuts. However the latest set of revisions makes the the core algorithm in the Haskell version more readable than the Python equivalent.

« Previous Page -- Next Page »